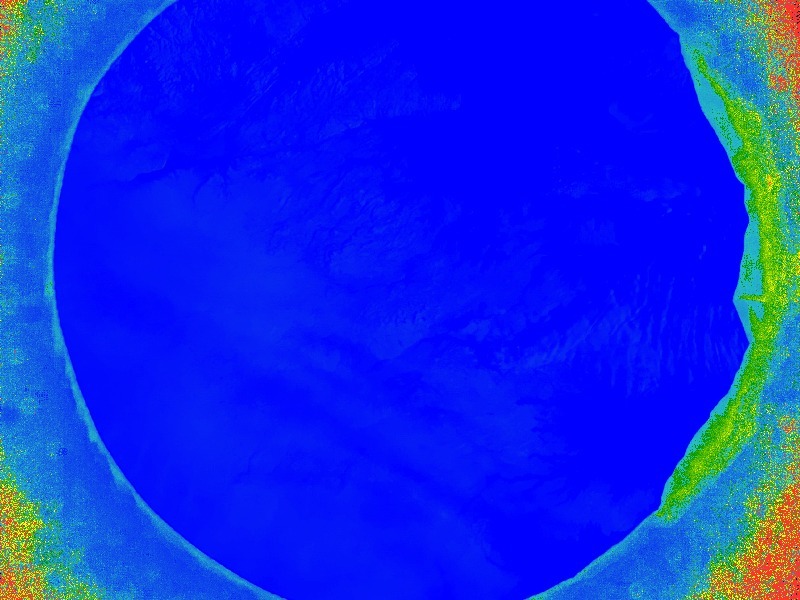

I am a middle school teacher looking to use NDVI for the first time with my code club students. The picture below of the coast of Newfoundland, Canada was taken with a Raspberry Pi NoIR near-infrared camera as part of the 2020 Astro Pi: Mission Space Lab competition, and we wanted to use NDVI to infer the relative health of plant populations at different latitudes. However, when we use the NDVI colorized preset on Infragram, as in the photo above, we can barely see any of the plant populations, which we know are present. How can we modify the NDVI settings to enhance the appearance of plant populations? Thanks for any help you can provide!

May I ask what material your camera is housed in? Some materials have very high NDVI values, and it looks like this material's NDVI is so high compared to the center of your image that it might be throwing the calculation off.

I cropped your image and dropped it into Infragram, and I'm still getting really low NDVI values for the visible land. It is worth noting that areas of barren rock, sand, or snow usually show very low NDVI values (like 0.1 or less).

The Raspberry Pi is housed in an aluminum enclosure, but the camera points out through an airlock porthole on the International Space Station; I am unsure what material surrounds the airlock.

Because the snow and porthole material in the earlier image may have lowered the NDVI values, I cropped another photo, this one of southern Italy, and used the NDVI blue filter colorized preset, but not much of the land shows up. Should I be multiplying Red or Blue in the NDVI equation to enhance the appearance of land?

@cfastie I'm wondering if you might advise on this...

This is a great question. I don’t have much information about how these photos were made, but I will make some assumptions. I assume the Pi NoIR camera (with no IR cut filter) did not have any other filter added to it (because no mention was made of an added filter). So the photos taken are color photos (three channels: R, G, B) and each channel captures one color (R, G, or B) plus lots of the near infrared in the scene. So each channel is a mix of visible light of one color and near infrared light. (Cameras have an IR cut filter because silicon sensors are sensitive to near IR which would ruin the photos.) There is also no information about how much of the light captured in each channel is visible vs. NIR.

Producing NDVI images requires two photos (or channels) of the same scene: one visible and one near infrared. If my assumptions are correct, you don’t have this and cannot make NDVI images.
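For reference, the standard NDVI formula compares one near-infrared measurement with one visible-light measurement of the same spot. A minimal Python sketch of the per-pixel calculation (the function name `ndvi` is just an illustrative choice, not part of any particular tool):

```python
def ndvi(nir: float, vis: float) -> float:
    """Normalized Difference Vegetation Index for one pixel.

    nir: brightness recorded in a near-infrared channel
    vis: brightness recorded in a visible-light channel
    Returns a value between -1 and 1 (0.0 if both inputs are 0).
    """
    total = nir + vis
    if total == 0:
        return 0.0  # completely dark pixel: avoid dividing by zero
    return (nir - vis) / total


# Two separate measurements of the same spot are required:
print(ndvi(150, 50))  # 0.5: NIR three times brighter than visible
print(ndvi(50, 50))   # 0.0: equal brightness
```

With only mixed channels (each one part color, part NIR), there is no clean `nir` or `vis` value to plug in, which is the problem described above.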

If my assumptions are not correct, my answer should be very different.

Chris

@cfastie

Hi Chris,

Thank you so much for taking the time to consider my problem! I'm afraid I am a relative NDVI neophyte, so I do not know how much of the captured light is visible vs. NIR. However, I revisited the Astro Pi hardware page and can confirm that the Pi NoIR does not have an IR filter (so near-IR light is captured), and that it also has a small plastic blue filter specifically to observe chlorophyll production in plants.

I have included a screenshot of the previous photo, unedited, with the color adjustment and EXIF info. There seem to be 4 distinct peaks, one of which (light blue) overlaps the red peak; I assume this is the near-infrared light? If so, I assume the "R-B" portion of the NDVI equation is meant to leave only the IR light, while the "R+B" portion is meant to account for all light absorbed? If that is the case, could we simply add a value (e.g. +1/2) to this equation to "brighten" the image and enhance any IR light?

I mention this because one of my students found the following wiki page regarding Infragram with Raspberry Pi (), and he noted that the equation displayed at the top of the page was not the same as the NDVI equation we had been using, (R-B)/(R+B). However, I was unable to find a justification for this discrepancy, and I remain unsure what the implications of arbitrarily adding a value to the equation would be (again, I assume this is to "brighten" the image and adjust for the limitations of the camera?). Any clarification you could provide would be greatly appreciated!

Thank you again for taking the time to consider our problem, and if there is any further information I can search for to help answer it, I will be more than happy to provide it.

Regards, Brendan Stanford

Brendan, that's good news. A Rosco 2007 filter was somehow attached to the lens of the RPi camera. That filter passes blue and NIR light but not much red. So the only light available to be captured by the red channel is NIR (all of the channels are sensitive to NIR). The red channel should therefore be a good record of how much NIR was reaching the sensor. The blue channel will capture blue light but it will also capture lots of NIR light (there is no way to prevent this). So in theory the camera has a channel with mostly NIR (red channel) and one with a mix of blue and NIR.
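With this filter, the red channel plays the role of NIR and the blue channel plays the role of visible light, so the formula becomes (R - B)/(R + B). Note the parentheses: R - B/R + B computes something quite different. A minimal per-pixel sketch, assuming 8-bit (R, G, B) values; the function name is hypothetical:

```python
from typing import Tuple


def infrablue_ndvi(pixel: Tuple[int, int, int]) -> float:
    """NDVI for one (R, G, B) pixel from a NoIR camera behind a blue filter.

    Assumption: the red channel records mostly NIR, and the blue channel
    records visible blue light (plus some NIR contamination).
    The green channel is ignored.
    """
    red, _green, blue = pixel
    total = red + blue
    if total == 0:
        return 0.0  # completely dark pixel: avoid dividing by zero
    # Parentheses matter: (R - B) / (R + B), not R - B/R + B
    return (red - blue) / total


print(infrablue_ndvi((150, 90, 50)))           # 0.5
print(round(infrablue_ndvi((60, 70, 58)), 3))  # 0.017: R and B nearly equal
```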

So why don't your photos produce excellent NDVI images? There are a couple of problems with this camera setup.

1) The blue channel, which is supposed to be your visible light channel, is contaminated with NIR light. So it is brighter than it would be if it were a perfect record of only how much blue light there was. It is not easy to figure out, for every pixel in every photo, how much contamination there is. The proportion of NIR:blue will not be constant either among pixels in one photo or among photos.

2) All consumer camera sensors are sensitive to NIR light, but they are not as sensitive to NIR as they are to any color (R,G,B) of visible light. So the red channel, which is supposed to be your NIR channel, will never be as bright as it should be if it were faithfully recording how much of the incoming light was NIR. It cannot be compared directly to the brightness of the blue channel to determine the ratio of NIR:blue in a scene.

3) Your camera was taking photos through a window which includes four pieces of glass (five inches thick from inner to outer surface), some of which have special coatings to reflect certain wavelengths. You can probably ask the Astro Pi organizers to tell you what the spectral transmission is across the visible and NIR wavelength range. Chances are good that the coatings were engineered to give a natural color balance in the visible range, but if those coatings blocked some NIR it probably did not bother anyone. So there might be a third reason that the NIR:blue ratio is lower than what it should be.

4) Your camera was taking photos of the ground from the other side of the entire blinking atmosphere. The sky is blue because air scatters blue light. So every scene of earth from up there will have a bluish haze which varies strongly with the amount of water vapor (and other things) in the air and with the angle of the sun. Every pixel in your photos will be variably contaminated with this blue light. That's why the original NDVI images in the 1970s (and all satellite NDVI since then) used a red channel for visible light. Red is scattered much less by the atmosphere. Sending a camera to space to capture plant health information with a blue filter instead of a red filter is either careless, negligent, foolish, or all three (all three). This is reason number four that the NIR:blue ratio will be lower than it should be.
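The combined effect of these four problems can be illustrated with a toy model. Every parameter value below is a made-up illustration, not a measured property of the Astro Pi camera, window, or atmosphere; the point is only that each effect pushes the recorded NDVI of healthy vegetation toward zero:

```python
def ndvi(nir, vis):
    """Standard NDVI; returns 0.0 for a completely dark pixel."""
    total = nir + vis
    return 0.0 if total == 0 else (nir - vis) / total


def recorded_ndvi(true_nir, true_blue, nir_leak=0.0, nir_sensitivity=1.0,
                  window_nir_transmission=1.0, haze_blue=0.0):
    """Toy model of how the four problems bias an infrablue camera's NDVI.

    All parameters are hypothetical illustrations, not measured values:
      nir_leak                NIR in the blue channel, as a fraction of the
                              NIR recorded in the red channel (problem 1)
      nir_sensitivity         sensor response to NIR relative to visible (2)
      window_nir_transmission fraction of NIR passed by the window (3)
      haze_blue               extra blue light scattered by the air (4)
    """
    nir_at_sensor = true_nir * window_nir_transmission
    red_channel = nir_at_sensor * nir_sensitivity  # the "NIR" channel
    # The blue channel gets real blue light, haze, and leaked NIR
    blue_channel = true_blue + haze_blue + nir_leak * red_channel
    return ndvi(red_channel, blue_channel)


# Ideal camera: NIR three times brighter than blue gives NDVI 0.5
print(round(recorded_ndvi(150, 50), 2))  # 0.5
# Same scene with all four (made-up) effects switched on
print(round(recorded_ndvi(150, 50, nir_leak=0.2, nir_sensitivity=0.6,
                          window_nir_transmission=0.9, haze_blue=10), 2))  # 0.03
```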

Any one of these four reasons might explain why NDVI is sometimes computed with a fudge factor that inflates the observed value for NIR. Without such an adjustment your photos will not produce NDVI images anything like the legacy NDVI scientists have used for five decades.
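In its simplest form, such a fudge factor multiplies the recorded NIR by a constant before the usual formula is applied. Here k is a symbol introduced for illustration; k = 1 gives the standard NDVI:

$$
\mathrm{NDVI}_k = \frac{k \cdot \mathrm{NIR} - \mathrm{VIS}}{k \cdot \mathrm{NIR} + \mathrm{VIS}}
$$

For example, if the recorded NIR and VIS are equal, k = 1 gives (1 - 1)/(1 + 1) = 0, while k = 3 gives (3 - 1)/(3 + 1) = 0.5.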

Your problem is that the fudge factor will be different for different photos. The two photos you included have different NIR:blue ratios. The difference is much more likely to be due to differences in scattering or sun angle than in plant health. So you have too many unknown variables to determine if your photos record differences in plant health.

To visualize this problem, it's good to examine the photographic histogram for small areas of your photos. Select areas which you know are continuous green vegetation. Display the histogram for just that area and you will see that the brightness of the red (NIR) and blue (VIS) channels is almost the same. For healthy vegetation with an NDVI of about 0.5, the incoming NIR light should be about 3 times brighter than the blue light (see https://publiclab.org/notes/cfastie/06-20-2013/ndvi-from-infrablue).

Above: Histogram for an area of green vegetation (marquee) near Embree, Newfoundland. The actual amount of NIR light (red channel) reflecting from that vegetation is probably three times brighter than the reflected blue (blue channel).

Above: Histogram for an area of green vegetation (marquee) near Petrona, Italy. The actual amount of NIR light (red channel) reflecting from that vegetation is probably three times brighter than the reflected blue (blue channel). At least in this photo the NIR channel is a tiny bit brighter than the blue channel.
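The same marquee check can also be done numerically instead of by eye. A minimal sketch, assuming the photo has already been loaded as rows of (R, G, B) tuples by an image library of your choice; the function name and the tiny 2 x 4 "photo" are made-up illustrations, not values from the Astro Pi images:

```python
def marquee_stats(image, top, left, bottom, right):
    """Mean red (NIR) and blue (VIS) brightness inside a rectangular marquee.

    image[row][col] is an (R, G, B) tuple. top/left are inclusive and
    bottom/right are exclusive, like Python slices.
    """
    pixels = [px for row in image[top:bottom] for px in row[left:right]]
    mean_red = sum(px[0] for px in pixels) / len(pixels)
    mean_blue = sum(px[2] for px in pixels) / len(pixels)
    ratio = mean_red / mean_blue
    ndvi = (mean_red - mean_blue) / (mean_red + mean_blue)
    return mean_red, mean_blue, ratio, ndvi


# Made-up 2 x 4 "photo": vegetation on the left, bright rock on the right
image = [
    [(92, 80, 88), (90, 78, 90), (140, 135, 150), (142, 138, 148)],
    [(94, 82, 90), (92, 80, 88), (138, 136, 152), (141, 137, 149)],
]
red, blue, ratio, ndvi = marquee_stats(image, top=0, left=0, bottom=2, right=2)
print(f"NIR:VIS ratio {ratio:.2f}, NDVI {ndvi:.2f}")  # NIR:VIS ratio 1.03, NDVI 0.02

# For comparison, healthy vegetation with NIR about 3 times VIS:
print((3 - 1) / (3 + 1))  # 0.5
```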

So how about we just triple the NIR value for every pixel? I have tried that many times and it never works. I don't know why, but I think it is because every pixel cannot be treated the same. Pixels of vegetation with low VIS values might need a different fudge factor for NIR than pixels with higher VIS values.
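One possible explanation, consistent with the guess above, can be shown with a toy model (an assumption, not a measured camera response): if the NIR channel is uniformly dimmed and the blue channel carries a constant haze offset, the multiplier needed to recover the true NDVI depends on each pixel's visible brightness, so no single factor works for every pixel. The function name is hypothetical:

```python
def required_nir_factor(true_blue, haze_blue, nir_sensitivity):
    """Factor k that would restore the true NDVI for one pixel in a toy model.

    Toy model (hypothetical):
      red channel  = nir_sensitivity * true_nir
      blue channel = true_blue + haze_blue
    Solving k * red / blue == true_nir / true_blue for k gives
      k = (true_blue + haze_blue) / (nir_sensitivity * true_blue),
    which does not depend on true_nir but does depend on true_blue.
    """
    return (true_blue + haze_blue) / (nir_sensitivity * true_blue)


# Same haze and same sensor for both pixels, different visible brightness:
print(required_nir_factor(true_blue=20, haze_blue=20, nir_sensitivity=0.5))  # 4.0
print(required_nir_factor(true_blue=80, haze_blue=20, nir_sensitivity=0.5))  # 2.5
```

In this model, darker vegetation pixels need a larger factor than brighter ones, which matches the observation that tripling every pixel does not work.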

I have found some success modifying the way the camera records the photo. Some cameras allow making a custom white balance preset which can instruct the camera to exaggerate the brightness of either the red or blue channel. This apparently does not blindly multiply every red channel pixel by the same factor but does something more nuanced. The RPi camera did not have a custom white balance feature the last time I looked, but someone might have added it by now. It does have other features which allow the relative brightness of color channels to be adjusted. There are several discussions of this somewhere on this website (https://publiclab.org/search?q=awb%20gains).

The actual good news is that any time vegetation in your photos has a slight orangey hue, it might be possible to produce a somewhat meaningful NDVI image from it. I have never had any success making NDVI images using infragram.org. Ned Horning wrote a plugin for Fiji (ImageJ) that can produce useful NDVI images from lots of different types of NIR photos. Instructions for installing it are here: https://publiclab.org/notes/nedhorning/01-13-2016/packaged-photo-monitoring-plugins-available-on-the-github-repositoy.

The NDVI image below was made from your Italy photo without modifying the photo. In Ned's plugin, I stretched the histogram of the NIR channel with a value of 3 and used the standard NDVI formula. The NDVI values for vegetation are not as high as they should be (they never get higher than about 0.3) but the image does allow discriminating many things that are vegetation from many things that are not vegetation.
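For readers who want to see the arithmetic behind stretching the NIR channel by 3 and then applying the standard formula, here is a rough Python sketch. How the plugin implements its stretch internally is not described here; this sketch assumes a plain multiply-by-3 with clipping to the 8-bit maximum of 255, and the pixel values are hypothetical:

```python
def stretched_ndvi(pixel, nir_stretch=3.0, max_value=255):
    """NDVI for one (R, G, B) pixel after scaling the NIR (red) channel.

    Assumption: the "stretch" is modeled as a plain multiply-and-clip.
    This is NOT necessarily what Ned Horning's Fiji plugin does.
    """
    red, _green, blue = pixel
    nir = min(red * nir_stretch, max_value)  # clip to the 8-bit range
    vis = blue
    total = nir + vis
    if total == 0:
        return 0.0  # completely dark pixel: avoid dividing by zero
    return (nir - vis) / total


def ndvi_image(image, nir_stretch=3.0):
    """Apply stretched_ndvi to every pixel of a rows-of-(R, G, B) image."""
    return [[stretched_ndvi(px, nir_stretch) for px in row] for row in image]


veg = (60, 70, 58)    # hypothetical vegetation pixel, R slightly above B
water = (20, 30, 80)  # hypothetical water pixel, B well above R
for name, px in [("vegetation", veg), ("water", water)]:
    print(name, round(stretched_ndvi(px), 2))
```

The stretch does not make the values match legacy NDVI, but it can separate pixels that are vegetation from pixels that are not.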

Although your original project of comparing the health of vegetation around the world might not work very well, many of your photos might reveal interesting patterns of vegetation, land use, land forms, and elevation. Making comparisons among photos will be fraught, but patterns within each photo could be valid fodder for analysis.

Maybe more importantly, your students could demonstrate how the Astro-Pi NIR camera could be designed more appropriately and scientifically for its intended mission. Same thing for the recommended image analysis software.

Chris

Thanks @cfastie for this in-depth response! Your experience is literally invaluable. Also great to see @nedhorning's name come up. I'm wondering if next steps are clear for @brendan_stanford, and whether any institutional outreach to the Astro Pi Foundation with ESA (formerly https://www.raspberrypi.org/blog/astro-pi-upgrades-launch/) might be in order regarding the filter material. The Rosco filter company itself blogged about fabrication here: https://www.rosco.com/spectrum/index.php/2018/03/rosco-filter-helps-students-observe-earths-vegetation-from-space/. @warren do you have any memories or points of contact regarding the filter color discussion with the Astro Pi Foundation to add in here? I don't see that I have any email records myself.

I'm wondering if we might invite people working with NDVI into a video call to really compare notes on the different software options for processing images into NDVI. The 3pm open call slot (see https://publiclab.org/open-call) on Tuesday May 26 is already booked by the "direct download of satellite images using ham radio techniques" crew (https://publiclab.org/questions/sashae/05-15-2020/is-anyone-working-on-the-reception-of-satellite-imagery-using-ham-radio-methods), so we could take 2pm https://everytimezone.com/?t=5ec9b900,438 or 4pm https://everytimezone.com/?t=5ec9b900,4b0 ? Here are the times I have available to facilitate: https://www.when2meet.com/?9165414-2DwFU please fill in your availability if interested. Thanks!

Thanks @liz and @cfastie for your advice and patience! Next steps are indeed clear; after some experimentation with @nedhorning's photo monitoring plugin, I was able to replicate the NDVI image Chris created by selecting single image index processing and stretching the IR band by a factor of 3. I was also able to create the NDVI image below of the original photo of Newfoundland, though I doubt it will be one of those we can make much use of given the snow and lack of an orange hue in the original photo. Nevertheless, we are much better off than when we got started, and my students and I greatly appreciate your assistance Chris!

Great news @brendan_stanford ! Thank you for closing the loop on this, and best of luck to the entire classroom :)
