Evaluation

Welcome! This is the home for all things related to evaluation at Public Lab. Many feedback efforts are ongoing across different sectors, and we try to coordinate them to minimize survey fatigue and redundancy. @liz leads the evaluation team! Ask questions below to find out more.

Follow along with current work

See all recent work tagged evaluation here, or click on the boards below to see progress on:

1) our Snapshot Evaluation and Evaluation Framework (May 2015-May 2018) generously supported by the Rita Allen Foundation

2) "pulse" feedback collected at in-person events, using the 5 Listen For Good questions plus 6 custom questions of our own

3) year-round ongoing evaluation processes

Trello Board

Trello Board

Trello Board

Here's a link to the staff board for developing year-round evaluation tasks, which are being integrated into the third Trello board above: https://app.asana.com/0/645629000328698/board

What are we measuring against?

All evaluation is tracked against our Logic Model, and the terms used in the Logic Model are defined in our Community Glossary.

How are we measuring?

Community Surveys

Our Annual Community Survey is delivered over email lists and posted on the website.

We also survey segments of our community who are having shared experiences:

- Software Contributors, example: 2017 survey, delivered via GitHub

- Organizers, example: 2017 survey, delivered via email and direct personal outreach

Stakeholder interviewing

A series of stakeholder interviews was conducted in 2017! You can read them here:

| Title | Author | Updated | Likes | Comments |
|---|---|---|---|---|
| Interview: Yvette Arellano | @stevie | about 7 years ago | 3 | 0 |
| Interview: Jim Gurley | @stevie | about 7 years ago | 1 | 0 |
| Interview: Nayamin Martinez and Gustavo Aguirre Jr. | @stevie | about 7 years ago | 2 | 0 |
| Interview: Karen Savage | @stevie | over 7 years ago | 0 | 0 |
| Interview: Ramsey Sprague | @stevie | about 6 years ago | 1 | 0 |
| Interview series with Grassroots Community Organizers | @stevie | over 7 years ago | 2 | 0 |
| What fuels a movement? | @stevie | almost 8 years ago | 5 | 0 |

Online analytics

The ever-growing Data Dictionary describes the datasets that are available for analysis. It was created by @bsugar and is maintained by @bsugar and @liz.

Topics

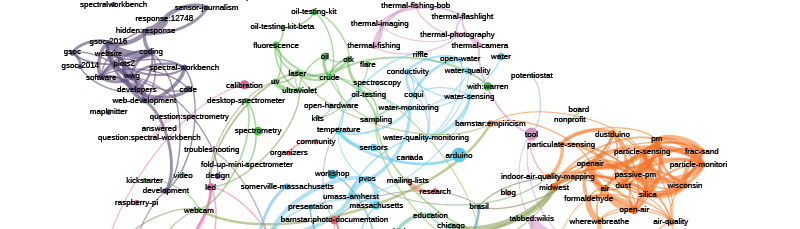

Conversational dynamics on mailing lists:

User interface design

See the User Interface page for more on design work toward improving the user interface and user interactions. This is an area where many people are offering feedback!

Questions

| Title | Author | Updated | Likes | Comments |
|---|---|---|---|---|
| evaluation notes: community segments -- not what you think! | @liz | over 6 years ago | 0 | 0 |
| How can I make a tag graph visualization? | @bsugar | almost 7 years ago | 3 | 7 |
| Is anyone studying or writing about the rise in Codes of Conduct in open source projects? | @warren | almost 7 years ago | 0 | 2 |
| Help with a standard mini-evaluation for assessing software outreach efforts? | @warren | about 7 years ago | 1 | 11 |

Related work

Older page content

From 2014 via @liz: brainstorming possible community metrics

From 2011 via @warren, interesting! Read on:

On this page we are summarizing and formulating our approach to self-evaluation. We are a community with strong principles that engages in open participation and advocacy within our partner communities, so this process does not follow a typical researcher/participant model. Rather, we seek an evaluative approach that takes into account:

- multiple audiences - feedback for local communities, for ourselves, for institutions looking to adopt our data, for funding agencies, etc.

- reflexivity - we may work with local partners to formulate an evaluative strategy, which may often include questionnaires, surveys, and interviews in which we take part both as subjects and as investigators

- outreach - by publishing evaluations in a variety of formats, we can employ diverse tactics to better understand and refine our work; publishing in diverse venues (journals, newspapers, white papers, video, public presentations, etc.) offers us an opportunity to reach out to various fields (ecology, law, social science, technology, aid)

- location - our evaluations should be situated in geographic communities, examining the effects of our tools and data production in collaboration with a specific group of residents

Goals

Good evaluative approaches could enable us to:

- quantify our data and present it to scientific and government agencies for use in research, legal, and

- provide rich feedback for field mappers (in the case of balloon mapping) and other public scientists to improve their techniques

- assess the effects of our work on local communities and situations of environmental (and other types of) conflict

- involve local partners in the quantification and interpretation of our joint work

- ...

Approaches

We're going to use a few different approaches to (self-)evaluation. Each has pros and cons, but we will try to structure each one to meet the goals above.

Approach A: Logbook questionnaire

The logbook is a proposed book, printed through Lulu.com, to bring along on balloon mapping field missions.

Although this strategy can be reductive compared to interviews, videos, etc., its standardized format yields data that we can graph, analyze, and publish for public use. The results will be published here periodically. Any member of our community may use them for fundraising or outreach, or, for example, print and carry them to the beach to improve their mapping technique.

Read more at the Logbook page.

A mini version of this questionnaire was used by Jen Hudon as part of her Grassroots Newark project and can be found here:

Approach B: Community Blog

The community blog represents a way for members of our community to ... critical as well as positive...

To contribute to the community blog, visit the Community Blog page

Approach C: Interviews

We're beginning a series of journalistic/narrative interviews with residents of the communities we work with. Read more at the interviews page.