When I first saw the near-infrared (NIR) photos from DIY camera modifications [1] on the PLOTS website, I was curious why they had a magenta hue. Initially I thought the NIR filter was letting in non-NIR light, but I later realized it was due to improper white balance. This note provides an overview of what white balance is and offers some suggestions on how to use white balance in NIR images.

What is white balance? White balance [2] is a process in which an image is adjusted to correct for variations in the quality of light, so that objects that are white in the scene appear white in the image. You may have noticed that colors under fluorescent lights (for example, paint samples in a store) look different from how they look outside or under incandescent lights. That's because the light coming out of a fluorescent bulb has different spectral characteristics than sunlight or an incandescent bulb, so colors appear different. This effect is usually more noticeable in photos from a digital camera. Have you ever taken a picture and the colors came out quite different from what your eye perceived? That is usually due to an inaccurate white balance.

How do cameras deal with it? Most cameras have some capacity to deal with white balance issues. Simpler cameras offer presets for different illumination sources such as fluorescent, daylight, and tungsten, and most also offer an auto white balance option. More advanced cameras have a manual white balance option that lets the photographer easily set a custom white balance for just about any lighting situation. A problem occurs with NIR cameras because the camera still applies a white balance correction that assumes only visible light is hitting the sensor. This produces a nonsensical correction, which in most cases seems to create an image with primarily magenta tones.

How to use white balance with NIR photos: Since the cameras we use for NIR photography are not calibrated for white balance in the NIR spectrum, the predefined and auto options will not work properly, so some sort of custom adjustment is the only option. If your camera has a custom white balance option, the adjustment is quite easy. You need to take a picture of a reference that you expect to have an even response across the three sensor channels. For visible photography, people use a white or gray card. I have experimented with an 18% gray card and the results look good. I have also used green grass, which seems to work quite well. If you don't have a gray or white card with you, you can look for a substitute such as a painted wall.

If you can't set a custom white balance in your camera, you can adjust it after the image is acquired using image processing software such as Photoshop, or GIMP with the UFRaw plugin, as long as you acquire the images in RAW mode. Most low-end cameras do not have a RAW option, but if you own a Canon camera there is a good chance you can add that capability using CHDK [3]. The process for doing white balance corrections in software is similar to the in-camera process. You need an image with a feature in it that can be used as a reference. For example, you could take a photo of a gray card under the same lighting conditions as the images you want to correct, then use the software to define that reference and apply it to correct the other images. There are tutorials on the Internet that explain how to do this correction using different types of software.
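In principle, a software correction like this comes down to computing one multiplier per channel from the reference patch and applying those multipliers to every pixel. Below is a minimal Python sketch of that idea. It assumes the pixel values are linear (RAW-derived) RGB triples and that the gray card or grass patch has already been cropped out. The function names `wb_gains` and `apply_wb` are hypothetical and are not part of Photoshop, GIMP, or UFRaw.

```python
def wb_gains(reference_pixels):
    """Per-channel multipliers that make the reference patch neutral.

    reference_pixels: (r, g, b) tuples sampled from a gray card or grass
    patch; assumed to be linear (RAW-derived) values, not camera JPEG values.
    """
    n = len(reference_pixels)
    means = [sum(p[c] for p in reference_pixels) / n for c in range(3)]
    # Assumption: red and blue are scaled to match green; tools differ here.
    return tuple(means[1] / m for m in means)


def apply_wb(pixels, gains, max_value=255):
    """Multiply each channel by its gain, clipping at max_value."""
    return [tuple(min(max_value, v * g) for v, g in zip(p, gains)) for p in pixels]


# Hypothetical gray-card samples with a magenta cast (red and blue too high).
card = [(198, 99, 202), (202, 101, 198)]
gains = wb_gains(card)
print(gains)  # (0.5, 1.0, 0.5)
print(apply_wb([(180, 90, 170)], gains))  # [(90.0, 90.0, 85.0)]
```

The reference patch only needs to be neutral in the wavelengths the camera records. Once the gains are known, the same multipliers can be applied to every photo taken under the same lighting.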

How important is it? I'm not sure how important this really is, but I think it's a good idea to be aware of it. I prefer to do white balance corrections since they provide a “truer” rendering of what is being imaged. The magenta images give the impression that there is more information in the image than there really is. I also happen to like the way the corrected images look.

Examples: At the top of this note are a few examples of images using different white balance settings. I took these on a cloudy day, but they give an indication of the effects of white balancing. The camera is a Canon A2200 that was converted at LEAFFEST [4]. From left to right:

1. Color image using auto white balance
2. NIR image using auto white balance
3. NIR image using an 18% gray card as the reference for an in-camera custom white balance
4. NIR image using grass as the reference for an in-camera custom white balance

[1] http://publiclaboratory.org/tool/near-infrared-camera

[2] http://www.cambridgeincolour.com/tutorials/white-balance.htm

[3] http://chdk.wikia.com/wiki/CHDK

[4] http://publiclaboratory.org/notes/nedhorning/9-25-2012/canon-a2200-nir-conversion

15 Comments

Ned, this is a dramatic result. It is startling to see that the color can be completely removed by applying a custom white balance, and also that this can be done simply by pointing the camera at grass while it computes the custom white balance. Do you have any idea how this will affect the resulting NRG (false-color IR) or NDVI images?

Much of the manipulation done during the custom white balance procedure might be to laterally slide the R, G, and B histograms independently to remove any tint bias in the image. It might not change the shape of any of the individual histograms very much. When an NRG or NDVI image is produced, only one of the channels (usually blue or red) from the infrared photo is used (in combination with data from a normal color image of the same scene). So the relationship among the three channels in the infrared photo might not matter much.
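The difference between "sliding" a histogram and reshaping it can be made concrete with two simple per-channel adjustments. Adding an offset moves a histogram without changing its width. Multiplying by a gain also stretches or compresses it. The Python sketch below uses made-up channel values and the population standard deviation as a measure of histogram width. Which of these adjustments a given camera actually applies is not known.

```python
import math
import statistics


def shift(channel, offset):
    """Slide the histogram: every value moves by the same amount."""
    return [v + offset for v in channel]


def scale(channel, gain):
    """Multiply every value: the histogram moves and also changes width."""
    return [v * gain for v in channel]


green = [40, 50, 60, 70]  # hypothetical values from one channel
width = statistics.pstdev(green)

# Width (spread) is unchanged by a shift...
print(math.isclose(statistics.pstdev(shift(green, 30)), width))  # True
# ...but grows by the gain factor under a multiplicative adjustment.
print(statistics.pstdev(scale(green, 1.5)) / width)  # approximately 1.5
```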

I have not done any tests yet, but I hypothesize that a good white balance on the NIR photo will not have much effect on the resulting NRG or NDVI images. This might be especially true when the new histogram stretching options of your ImageJ plugin are used to produce NRG and NDVI images. These options intentionally widen and center the histogram of the single NIR channel being used, and I assume they have more impact on the outcome than the white balance of the original NIR photo. Chris

Hi Chris,

Thanks for your comments. For me, the most dramatic impact of white balance was the realization that there is very little difference in the intensity of light received across the R, G, and B channels of the NIR cameras we're using. In theory, white balance should do more than simply slide the histograms. It should be a more detailed adjustment or transformation, but I haven't looked into how the camera actually handles it. Even if the effects appear minimal, applying white balance should produce a more accurate representation of the NIR reflectance properties of what we're imaging.

My claim that there is little difference in intensity among the R, G, and B bands of the NIR cameras assumes that the gray target I'm using to set the white balance also appears “gray” in the NIR part of the spectrum. In other words, I'm assuming the gray target is wavelength-neutral and affects visible and NIR wavelengths in the same way. Maybe that would be a good test for the PLOTS spectrometer. Grass is not a neutral target in the visible wavelengths, but I expect it's reasonably neutral across the NIR wavelengths we're using.

The effect of using white balance on the NDVI calculation will probably be minimal if the histograms are stretched. In fact, if you stretch the histograms I wouldn't expect much difference between any of the NIR channels when no white balance is applied.
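This expectation can be checked with a few lines of Python if white balance is modeled as a per-channel gain plus offset, which is an assumption. The sketch also assumes a plain min-max stretch; the ImageJ plugin's actual stretch may clip percentiles or differ in other ways. It shows that any positive gain and offset applied to a single channel disappears once that channel is stretched.

```python
import math


def stretch(channel, out_max=255.0):
    """Linear min-max stretch of one channel to the range 0..out_max."""
    lo, hi = min(channel), max(channel)
    if hi == lo:  # flat channel: nothing to stretch
        return [0.0 for _ in channel]
    return [(v - lo) * out_max / (hi - lo) for v in channel]


nir_raw = [12, 30, 45, 80]                     # hypothetical NIR channel values
nir_balanced = [1.8 * v + 5 for v in nir_raw]  # same channel after a gain and offset

same = all(math.isclose(a, b, abs_tol=1e-9)
           for a, b in zip(stretch(nir_raw), stretch(nir_balanced)))
print(same)  # True
```

Under this model, any white balance effect on a single stretched channel cancels out. A nonlinear in-camera adjustment would not cancel this way.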

Until we are working with calibrated cameras we will not be able to produce much better than relative NDVI images. We will be able to see higher and lower NDVI within an image but it will be difficult to compare images acquired using different cameras, filters, white balance, or other settings. That said, I think what we have now is pretty powerful.
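The idea of relative NDVI can be illustrated with the usual definition, NDVI = (NIR - VIS) / (NIR + VIS). The Python sketch below uses made-up pixel values and applies a uniform gain to the NIR channel to stand in for a different camera, filter, or white balance setting. The absolute NDVI values change with the gain, but for positive pixel values the ordering of pixels from low to high NDVI does not.

```python
def ndvi(nir, vis):
    """Normalized difference vegetation index for one pixel."""
    return (nir - vis) / (nir + vis)


def ndvi_with_gain(pixels, nir_gain):
    """NDVI values after scaling the NIR channel by nir_gain."""
    return [ndvi(nir_gain * nir, vis) for nir, vis in pixels]


def ranking(values):
    """Pixel indices ordered from lowest to highest value."""
    return sorted(range(len(values)), key=lambda i: values[i])


pixels = [(120, 60), (90, 80), (150, 40)]  # hypothetical (NIR, visible) pairs
for gain in (1.0, 0.7):
    values = ndvi_with_gain(pixels, gain)
    print(gain, [round(v, 3) for v in values], ranking(values))
```

Because NDVI increases monotonically with the NIR/VIS ratio, a constant gain shifts every value but keeps "higher" and "lower" NDVI areas in the same order within an image.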

I've been racking my brains to figure out a way to measure the spectral passband of each color of the Bayer filter (R, G, B), because some images suggest to me that they are in fact optimal for different IR ranges -- images like these:

https://mapknitter.org/map/view/savethebay-providence-infrared

which show rocks and vegetation as, respectively, pinkish and purplish. Notice that the rocks used to shore up the beach (presumably granite?) are pinker than those sitting right at the waterline. This picture shows that most clearly. I'd love to determine if we're actually able to get 2 bands instead of just one broad NIR band. Ideas?

Jeff - I think a good way to get a handle on this is to apply a white balance before imaging. Again, assuming the white balance target is neutral across the wavelengths you are detecting, any color variation in the resulting image means you are getting more than one band of information. I expect that the colors you are seeing are largely due to a wonky white balance.
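Once an image has been white balanced on a target assumed to be neutral, this test could be done numerically. One simple measure, sketched below in Python, is how much each channel's share of a pixel's total brightness varies across the image. This chromaticity-based check is an illustrative assumption, not an established Public Lab procedure. What counts as "near zero" would have to be judged against sensor noise.

```python
import statistics


def chromaticity_spread(pixels):
    """Spread of each channel's share of pixel brightness across an image.

    pixels: (r, g, b) tuples from an image already white balanced against a
    target assumed to be spectrally neutral. Returns the population standard
    deviation of r/(r+g+b), g/(r+g+b) and b/(r+g+b); values near zero mean
    the three channels rise and fall together.
    """
    lit = [p for p in pixels if sum(p) > 0]  # skip pure black pixels
    return tuple(statistics.pstdev([p[c] / sum(p) for p in lit]) for c in range(3))


gray_scene = [(10, 10, 10), (50, 50, 50), (200, 200, 200)]
two_band_scene = [(100, 50, 50), (50, 100, 50)]
print(chromaticity_spread(gray_scene))      # all near zero: channels move together
print(chromaticity_spread(two_band_scene))  # red and green shares vary between pixels
```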

I also want to know the spectral qualities of the cameras we're using so we can calibrate them to measure radiance or at least characterize the signal to get closer to quantitative measurements. I'm working on contacts to help with that.

OK, cool. Re: calibrating cameras, would we be able to point them at a calibration sheet with a known amount/spectrum of light and take a calibration image, or adjust white balance to the calibration sheet?

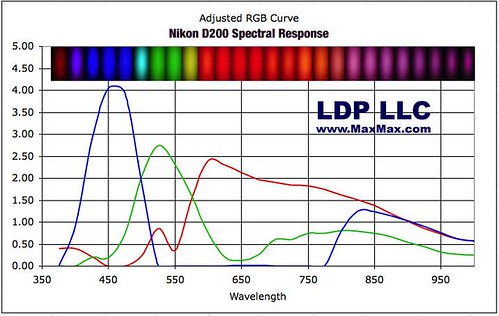

The three channels in an NIR photo will record different proportions of NIR wavelengths (e.g., 700-1000 nm) because the Bayer filters pass different proportions of these wavelengths. The particular wavelengths passed are haphazard and not by design, because these wavelengths are normally blocked by the IR filter that we removed. The image above shows an example for one camera model. Other cameras could be very different.

In addition, the film used as a visible light blocking filter leaks some wavelengths in the visible range which will be added to the signal picked up in one or more of the RGB channels of an NIR image.
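These two effects can be summarized in a simplified sensor model. This is an idealization that ignores noise, nonlinearity, and in-camera processing. Let $L(\lambda)$ be the light reaching the lens, $T(\lambda)$ the transmission of the film filter, $B_c(\lambda)$ the transmission of the Bayer filter for channel $c$, and $S(\lambda)$ the sensitivity of the bare sensor. The raw signal in channel $c$ is then roughly

$$
DN_c \propto \int L(\lambda)\, T(\lambda)\, B_c(\lambda)\, S(\lambda)\, d\lambda, \qquad c \in \{R, G, B\}
$$

Differences between the $B_c$ curves in the NIR, and any visible light that $T$ lets through, both appear as differences between the three channels.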

So the three channels in an NIR photo will differ from one another for two hard-to-control reasons. It seems that the green channel in our DIY NIR images is often darker (histogram shifted to the left) than the red and blue channels. Shifting the green histogram to the right removes the pink tint in the images (try this in Photoshop). The histograms for the red and blue channels are usually similar, so either one could be used successfully to make an NRG or NDVI image, and it may not matter which one is chosen. Because the NIR image may be dark (our NIR cameras require more light to make an exposure than unmodified cameras), whichever channel is brighter might be the best one to choose.
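This rule of thumb for choosing between the red and blue channels can be sketched in a few lines of Python. The sketch assumes the NIR photo is available as a list of (r, g, b) tuples and uses the channel mean as a stand-in for "brighter". Comparing medians or histogram peaks would be equally reasonable.

```python
CHANNELS = {"red": 0, "green": 1, "blue": 2}


def channel_means(pixels):
    """Mean value of each channel over a list of (r, g, b) pixels."""
    n = len(pixels)
    return {name: sum(p[i] for p in pixels) / n for name, i in CHANNELS.items()}


def brightest_channel(pixels, candidates=("red", "blue")):
    """Pick the candidate channel with the highest mean value."""
    means = channel_means(pixels)
    return max(candidates, key=lambda name: means[name])


nir_photo = [(140, 95, 130), (160, 105, 150), (120, 80, 125)]  # hypothetical pixels
print(channel_means(nir_photo))
print(brightest_channel(nir_photo))  # red
```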

What you describe seems like white balancing, which I think of as more of a normalization process than calibration. White balance using a standard target is a step toward being able to compare different camera/filter setups, but that alone won't provide enough information for calibration. Calibration, at least the way I think of it, is the process of converting pixel values to a physical measure, which in our case is spectral radiance: basically, light intensity at different wavelengths. That's a tougher problem, but if we can figure out a DIY approach to solve it, that would be cool.

Would we be able to use the PLOTS spectrometer to see if a gray card or something similar has an even response (flat spectrum) into the NIR portion of the spectrum?

Yes, that sounds pretty feasible. What's nice is that the spectrometer measures a whole column of pixels, so perhaps we can measure two regions at once and avoid exposure compensation or compression problems. Not sure, just thinking ahead.

Chris - If you are referring to JPEG images acquired using one of the camera's internal white balance settings, then I don't think the histograms for the different channels tell us much, since we have no idea how the camera adjusted the images. I expect those histograms get whacked out during processing, so I don't think we can use them as a reference. The image we see isn't what the sensor (with the Bayer filter) sees, and in most cases it's not even close. The images are processed to adjust for a number of factors so that they better resemble reality.

There is definitely some visible light leaking through the film filters, but I'm not sure how significant it is. It would be nice to put a number on that. When I hold the film up to a light I can see the light, but it's very dim. When I hold a glass IR 850 filter up to the light, I don't see any light come through.

We should have a meet-up to test some of these things. I have three filters now, two professionally installed, and there are some simple tests we could run to better understand what's going on and what it means from a practical standpoint. I'll also step up my effort on the calibration side of things.

I agree re: meetup -- it'd give me more incentive to keep working on the IRCam too.

Are there any aerial imaging calibration garden plots in the Northeast? The only ones I know of are in Kansas, I think.

Actually, what about the Harvard Forest?

Another possibility for a forest site is the Hubbard Brook Experimental Forest in New Hampshire, but getting permission might be a nightmare unless we have a research agenda.

Calibration gardens – Flying over a soybean field is a lot easier than flying over a forest. Soybeans are also a lot more homogeneous, and it is easier to measure photosynthesis in them. I guess the idea is to fly over a field where photosynthesis had very recently been measured, with the goal of calibrating the NDVI values derived from the aerial images? Maybe Dorn can lead us to such a field next summer. Dorn will soon have biomass results for each species in each treatment plot of his cover crop field. That may be as good as any other calibration field.

Calibration of the cameras – Can this be done by taking photos of a light source of known spectral qualities? RAW images could be captured. I am not sure why we want to do this.

Ned – You are correct that a JPEG photo does not tell us much about which wavelengths passed through the exposed film filter and then through the Bayer filters. A RAW image file does not have much more information about this. Even knowing which visible wavelengths are leaking through the film filter will not help unless we know they are all being captured in (e.g.) the red and green channels, so we know to use only the blue channel to produce NRG or NDVI images.

To find out how good the PLOTS IR camera is at producing NDVI, the first step might be to take images with all three of your NIR camera pairs and compare the NDVI made with them.

I wonder whether the PLOTS IR camera tool will ever be able to produce anything but relative values for NDVI unless two targets with known spectral reflectance are included in every photo.
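The two-target idea corresponds to what is usually called the empirical line method. If a dark and a bright target of known reflectance appear in the same photo, a straight line through their (pixel value, reflectance) pairs converts every other pixel in that band to approximate reflectance. A minimal Python sketch follows. It assumes linear (RAW-derived) pixel values, one band at a time, and targets whose reflectance in that band has been measured. The target values shown are made up.

```python
def empirical_line(dn_dark, refl_dark, dn_bright, refl_bright):
    """Return a function mapping pixel values (DN) to reflectance for one band.

    The line passes through the two targets' (DN, reflectance) pairs.
    """
    if dn_bright == dn_dark:
        raise ValueError("targets must have different pixel values")
    gain = (refl_bright - refl_dark) / (dn_bright - dn_dark)
    offset = refl_dark - gain * dn_dark
    return lambda dn: gain * dn + offset


# Hypothetical NIR-band targets: dark tarp (5% reflectance), white panel (85%).
to_reflectance = empirical_line(20, 0.05, 220, 0.85)
print(round(to_reflectance(120), 3))  # 0.45
```

Doing this separately for the NIR and visible bands would give reflectance values from which NDVI could be computed, as long as the camera response is close to linear.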

I agree that a forest is not a good calibration site. For aerial and satellite sensors, a popular calibration site is White Sands in New Mexico. It has well-documented, static reflectance properties, and it's huge, flat, and not all that sensitive to specular reflection. If we want a vegetation site, then maybe short grass on flat terrain, like a turf farm or golf course, would work. Helpful properties would be dense cover, few shadows, and level terrain. Here's a link if you want to see some other possibilities: http://calval.cr.usgs.gov/satellite/sites_catalog/

If we wanted to calibrate the images to NDVI we'd probably need to have a spectrometer to measure radiance so we could calculate reasonably accurate NDVI for test points and then develop a relationship between image values and NDVI. Another, more direct approach if we are interested in a physical variable like biomass would be to calibrate directly with biomass. That's basically how we map above-ground forest biomass. We talked a bit about doing this with Dorn when we were out there but I guess interest waned.
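At its simplest, developing "a relationship between image values and NDVI" could be a straight-line fit between image-derived values and spectrometer-derived NDVI at the test points. The Python sketch below uses ordinary least squares and assumes the relationship is linear, which may not hold. The numbers are made up. The same fit would work against field-measured biomass instead of NDVI.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical test points: image-derived index vs. spectrometer NDVI.
image_values = [0.10, 0.25, 0.40, 0.55]
measured_ndvi = [0.21, 0.42, 0.58, 0.81]
slope, intercept = fit_line(image_values, measured_ndvi)
print(f"NDVI ≈ {slope:.2f} * image value + {intercept:.2f}")
```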

As for calibrating the cameras, what you wrote is more or less how it works. You take pictures of very narrow wavebands of light with known intensity. There are a few extra steps, but at the end you have a camera that can record radiance for the R, G, and B bands. You also know the spectral response for each band recorded by the camera. Knowing the physical characteristics of a camera's sensor isn't critical for most applications, but it is handy for analysis.
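The core of the spectral-response part of that procedure can be sketched in Python. For each narrow waveband of known radiance, the dark signal is subtracted, each channel's pixel value is divided by the radiance, and the result is normalized. This sketch assumes a linear sensor and a known dark-frame value, and it uses hypothetical readings. The "few extra steps" mentioned above, such as absolute radiometric scaling, are not included.

```python
def relative_response(dn_by_wavelength, radiance_by_wavelength, dark_dn=0.0):
    """Relative spectral response of one channel, normalized to a peak of 1.

    dn_by_wavelength: {wavelength_nm: pixel value} for one channel, one photo
        per narrow waveband.
    radiance_by_wavelength: {wavelength_nm: known radiance of that waveband}.
    dark_dn: pixel value recorded with no light, subtracted first (assumed).
    """
    raw = {wl: (dn - dark_dn) / radiance_by_wavelength[wl]
           for wl, dn in dn_by_wavelength.items()}
    peak = max(raw.values())
    return {wl: r / peak for wl, r in raw.items()}


# Hypothetical red-channel readings at three NIR wavebands.
dn = {750: 180.0, 850: 120.0, 950: 60.0}
radiance = {750: 2.0, 850: 2.0, 950: 1.5}
print(relative_response(dn, radiance, dark_dn=20.0))
```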

Hi all, warm greetings.

I am new to NIR photography, so I have some questions about white balancing, calibration, etc. I have a Nikon D70s camera modified for NIR imaging. I had the internal hot mirror filter replaced with a filter that passes only red and NIR light, i.e., my camera now records only the NIR and red bands. My goal is to generate NDVI images from the photos I take with this modified camera. To calculate NDVI, I need to convert the digital numbers (DN) to reflectance, and I am not sure how to do this.

Another issue is that I need to separate the red and NIR signals from a single image, because NIR is mixed into each band (R, G, B) and the three Bayer filters might not be equally sensitive to NIR light. How can I separate NIR and red from the image? Any other suggestions on getting NDVI with this modified digital camera would be highly appreciated. Regards, Babu
