There are some discussions going on about how we might approach environmental regulation as a community: understanding it, critiquing it, improving it. Here are my thoughts:

Many folks are familiar with online, collaborative writing platforms like Google Docs that offer detailed version tracking and line-by-line commenting. Etherpad is another example: it's the open source equivalent that runs every "Talk" page on publiclab.org. Some people may also be familiar with online platforms such as github.com that offer the same features for collaborating on more specialized text (programming code). Some experiments are emerging in the legislative space that use the simplest line-by-line commenting features to collaboratively build a broader understanding of existing texts and to organize responses to them. One example is in NYC, where activists are organizing public discussion on specific policy points in the City Charter as part of the Charter Revision Commission process: https://via.hypothes.is/https://sites.google.com/view/nyccharterreadinggroup. These are part of a trend of democracy activists around the world running experiments to involve greater numbers of people in contributing to “governance beyond the vote” by collaboratively writing the documents of governance, such as constitutions and legislation. For some examples, visit: https://catalog.crowd.law/. Huge amounts of work have gone into making progress at this scale, and it's been a long time coming. For a glimpse into an earlier moment in legislative collaboration and version control, see this 2012 post by SunlightFdn full of passionate prototypes and broken links.

I was clued in by Digital Humanities professor Jo Guldi to an approach called “text mining” used to compare and analyze large bodies of words such as parliamentary debate transcripts (Barron, Huang, Spang, and Dedeo, 2018) and legal texts (Funk and Mullen, 2018). If this sounds interesting, consider checking out some of the methods described by Julia Silge in Text Mining with R. Overhearing our discussion, @Bronwen The Librarian offered an analog precedent for text mining called a “concordance,” a kind of index that assists in engaging in a quantitative way with text (think The Bible), such as how many times a certain word appears. Quantitative approaches to text can offer tracing of lineage, such as what was written first and went on to influence many others, and track subtle changes over time, for instance in tone or other indicators of culture change.
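To make the concordance idea concrete, here is a minimal sketch in Python (standard library only). It counts how often each word appears and lists every occurrence of a keyword with a few words of context on either side. The function names and the sample passage are my own placeholders for illustration; they are not taken from the works cited above or from any real policy.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def concordance(text, keyword, width=3):
    """Return (left context, keyword, right context) for each occurrence."""
    tokens = tokenize(text)
    hits = []
    for i, tok in enumerate(tokens):
        if tok == keyword.lower():
            left = " ".join(tokens[max(0, i - width):i])
            right = " ".join(tokens[i + 1:i + 1 + width])
            hits.append((left, tok, right))
    return hits


# Placeholder passage, invented for illustration only (not real policy text).
sample = (
    "The agency shall test water for lead. "
    "If lead is found, the agency shall notify residents."
)

word_counts = Counter(tokenize(sample))   # how many times each word appears
lead_hits = concordance(sample, "lead")   # every use of "lead", in context

print(word_counts["lead"])
for left, word, right in lead_hits:
    print(f"{left} [{word}] {right}")
```

Real text mining goes much further (comparing word use across documents and over time), but a count-and-context index like this is roughly what a printed concordance provides.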

What does this have to do with Public Lab?

I'd offer that an open culture approach to policy and regulation might experiment with BOTH text analysis on existing policy AND detailed version tracking + line-by-line feedback for collaboratively developing future policy. This would be consistent with the way Public Lab extends open culture practices of transparency and scaffolding interaction to specialized domains in order to broaden participation. Some may recall me muttering the phrase "the regulatory landscape is more fragmented than the ecological landscape." Our community collaborates to share knowledge about environmental health issues across regions; this translocal collaboration should include policy, and we need a set of tools that enables us to compare, analyze, and track policy points that correlate with best (and worst) environmental health outcomes.

Quick start

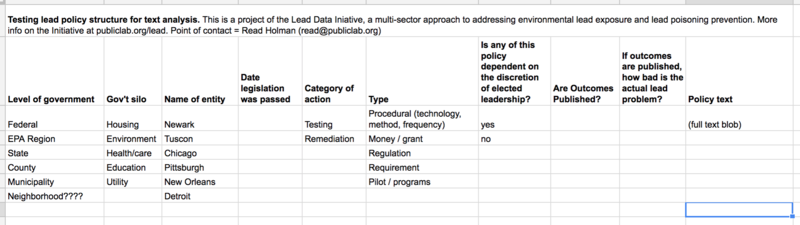

Yesterday, in conversation with @read_holman, the Public Lab Fellow who is leading a nationwide lead data initiative, we briefly experimented with making a spreadsheet where we could add attributes to policy text. He started to explain just a few of the relevant attributes that would be needed to visualize variation in regulatory approach alongside health outcomes in the cities he's engaging, which include Houston, Tucson, and Detroit. In the screenshot, see the columns we mocked up for level of government, domain (or gov't silo such as housing, environment, or utility), and so on:

The columns above are just a sketch of a first draft meant to invite more people into brainstorming on this idea. I suspect that text mining could identify patterns in policy by taking other attributes into account and comparing across regions. What if we paired up domain experts like @read_holman (who works on lead and public health) with text miners to seek patterns? Are there certain policies that exist at some levels of government that disrupt the function of otherwise good policy that exists at other levels? Can we identify types of policy approaches that are easily gamed, can be undermined by elected officials, or have been shown to repeatedly fail somewhere along the way to protecting public health from lead? Can we map these patterns and organize to address this?
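As a rough sketch of how a spreadsheet like this could feed that kind of comparison, the Python below reads rows exported as CSV and groups jurisdictions by level of government and domain. Only those two column names come from our mock-up; the `jurisdiction` and `excerpt` columns, the `group_by` helper, and every row are hypothetical placeholders.

```python
import csv
import io
from collections import defaultdict

# Hypothetical export of the spreadsheet. "level" and "domain" follow the
# mock-up; the jurisdictions and excerpts are placeholders, not real policy.
SHEET = """jurisdiction,level,domain,excerpt
City A,city,housing,placeholder excerpt 1
County B,county,environment,placeholder excerpt 2
State C,state,utility,placeholder excerpt 3
City D,city,housing,placeholder excerpt 4
"""


def group_by(rows, *keys):
    """Map each combination of attribute values to the jurisdictions that share it."""
    groups = defaultdict(list)
    for row in rows:
        groups[tuple(row[k] for k in keys)].append(row["jurisdiction"])
    return dict(groups)


rows = list(csv.DictReader(io.StringIO(SHEET)))
by_level_domain = group_by(rows, "level", "domain")

for key, places in sorted(by_level_domain.items()):
    print(key, places)
```

Once policies are tagged this way, the excerpts in each group could be handed to a text miner, or simply read side by side.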

Integrating decentralized publics into centralized policy-making processes

Stepping aside from what patterns text mining may unearth, here are three more basic options for structuring public engagement around policy:

- Perhaps if text miners collaborate with domain experts to sort out and organize the policy in machine-readable ways for the purpose of their analysis, a secondary yield might be that it becomes easier for the rest of us to simply compare policy on the same topic from different municipalities, and to structure public comment around specific policy points that appear again and again in city, county, and state policy.

- Separately, somewhere in the world, it is likely that some open source group is already running workable, scalable public policy discussions supported by text in accessible formats with line-by-line commenting, and maybe accompanied by some visualizations. We could look into these approaches (by saying hi to some folks featured in Crowd Law Catalog!) and try one or two.

- Lastly, we could at least try using the same version control and line-by-line commenting tools for environmental health policy that we already use for other writing. This is so 2012, yet using GitHub to organize efforts to broaden comprehension and collaboration would still be more helpful than a pile of PDFs.
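The line-by-line comparison that version control tools provide can be sketched with Python's built-in `difflib` module. The two clause lists below are placeholders invented for illustration, not quotes from any real ordinance, and `changed_lines` is a hypothetical helper name.

```python
import difflib

# Placeholder clauses invented for illustration; not from any real ordinance.
city_a = [
    "Landlords must test for lead before a new lease.",
    "Results must be shared with tenants.",
]
city_b = [
    "Landlords must test for lead every five years.",
    "Results must be shared with tenants.",
]


def changed_lines(old, new, old_name="city_a", new_name="city_b"):
    """Return only the added (+) and removed (-) lines of a unified diff."""
    diff = difflib.unified_diff(
        old, new, fromfile=old_name, tofile=new_name, lineterm=""
    )
    return [
        line
        for line in diff
        if line.startswith(("+", "-")) and not line.startswith(("+++", "---"))
    ]


diff_lines = changed_lines(city_a, city_b)

# Share of clauses the two texts have in common (1.0 means identical).
similarity = difflib.SequenceMatcher(None, city_a, city_b).ratio()

for line in diff_lines:
    print(line)
print(similarity)
```

This is the same kind of output a version control system shows when a document changes, applied here to two jurisdictions' text on the same topic rather than two versions of one text.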

I would be excited to see some experiments along these lines. I would be even more excited to hear your ideas! What do you think?

3 Comments

Interesting! Made me think of @bsugar and Thomas Levine (whose profile I can't find!)

Interesting idea. Certainly a good way to get buy-in and to discover issues/ideas that may be buried.

Thanks for posting this @liz. A good intro to the topic and great conversation starter.

I've been noodling on what a specific example might be (within the domain of distributed lead-related policy making). It'd be nice to identify a fairly straightforward item to test this approach with. To that end, Medicaid might make for an interesting first area of study (first for us, probably not for the world out there). Medicaid has long been an area of research interest for folks in health care as it's state-run with federal stuff thrown in. A lot of people out there are studying the effectiveness of particular Medicaid practices, comparing across states, etc. I imagine someone out there is doing that comparison via text mining, but I'm not sure. There's an even smaller chance that someone is doing text mining on lead-related Medicaid policies. So... might be a good opportunity.

The way it works: Medicaid requires (States to require) children to be tested. States can vary the number of tests required and the ages of those tests and level of enforcement / reimbursement involved to ensure this actually happens. Doctors that find lead poisoning in that testing are supposed to report it to the county health department which is supposed to do a home inspection to find the source of that lead. Note the multiple uses of the phrase "supposed to"... But to be clear: The entire public health lead poisoning prevention infrastructure -- in every county in the country -- is currently built on a child showing up to the doctor and getting tested for lead by that doctor (cynically, people like me are like: "Why are we using children as our lead detection monitors?").

So yeah, could be interesting. I'll post a few links here for background, though I haven't done a deep dive into this topic yet:

Medicaid: Lead Screening https://www.medicaid.gov/medicaid/benefits/epsdt/lead-screening/index.html

State Health Care Delivery Policies Promoting Lead Screening and Treatment for Children and Pregnant Women (5.21.18) https://nashp.org/wp-content/uploads/2018/05/NASHP-Lead-Policy-Scan-5-21-18_updated.pdf

Using Medicaid Data to Improve Childhood Lead Poisoning Prevention Program Outcomes and Blood Lead Surveillance https://www.ncbi.nlm.nih.gov/pubmed/30507770

Millions of American children missing early lead tests, Reuters finds https://www.reuters.com/investigates/special-report/lead-poisoning-testing-gaps/

I have a number of thoughts on the specific design of an effort, but that would take a lot to type out. And anyway, it'd be good to get reactions to this particular study topic I've thrown out before diving into the study design. Reactions useful...
